Work Experience

Chung-Yeon Lee

I'm a research scientist at Surromind in Seoul, where I lead a small research team focused on multimodal, physical, and generative AI models and their applications across various domains. At Surromind, I've worked on sound- and vibration-based fault diagnosis systems, service and field robot learning, and generative models (LLMs, VLMs, VLAs), among others. I did my PhD at Seoul National University, where I was advised by Byoung-Tak Zhang.

Research

I'm interested in bridging AI and robotics, with the goal of building intelligent physical agents capable of spatial reasoning, high-level planning, and adaptive interaction in complex real-world environments.

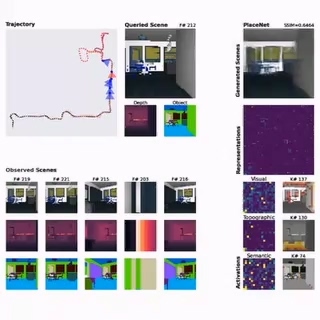

PlaceNet: Neural Spatial Representation Learning with Multimodal Attention

Chung-Yeon Lee, Youngjae Yoo, Byoung-Tak Zhang

International Joint Conference on Artificial Intelligence (IJCAI), 2022

code / paper / video

A multimodal attention-based neural representation model that integrates RGB, depth, and semantic information to enable generalizable spatial representations and novel-view rendering across diverse indoor environments.

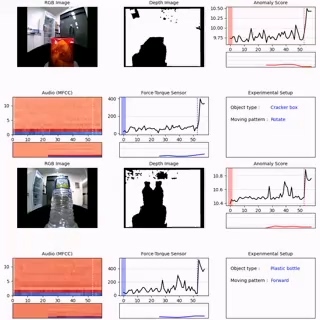

Multimodal Anomaly Detection based on Deep Auto-Encoder for Object Slip Perception of Mobile Manipulation Robots

Youngjae Yoo*, Chung-Yeon Lee*, Byoung-Tak Zhang

International Conference on Robotics and Automation (ICRA), 2022

paper / video

A deep autoencoder framework that combines RGB, depth, audio, and force-torque sensor data to detect object slip anomalies in mobile manipulation robots, learning latent representations of normal conditions and identifying deviations from them in noisy real-world environments.
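
The core idea, learning to reconstruct normal multimodal readings and flagging inputs that reconstruct poorly, can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the paper's model: a linear autoencoder fitted in closed form via truncated SVD (equivalent to PCA) stands in for the deep autoencoder, the fused feature vectors are synthetic, and the 99th-percentile threshold is a hypothetical choice.

```python
import numpy as np

def fit_linear_autoencoder(X_normal, k):
    """Fit a k-dimensional linear autoencoder on normal data only.

    For squared reconstruction error, the optimal linear autoencoder spans the
    top-k principal subspace, so a truncated SVD replaces gradient training here
    (a simplification standing in for the deep model).
    """
    mean = X_normal.mean(axis=0)
    _, _, vt = np.linalg.svd(X_normal - mean, full_matrices=False)
    W = vt[:k].T  # shape (d, k): encoder is W.T, decoder is W
    return mean, W

def reconstruction_error(X, mean, W):
    Z = (X - mean) @ W          # encode into the latent space
    X_hat = Z @ W.T + mean      # decode back to the input space
    return np.mean((X - X_hat) ** 2, axis=1)

def fit_threshold(errors_normal, q=99.0):
    # Hypothetical rule: flag anything reconstructed worse than the q-th
    # percentile of reconstruction errors on normal training data.
    return np.percentile(errors_normal, q)

# Synthetic stand-in for fused features: each row would concatenate
# per-modality features (e.g., RGB, depth, audio, force-torque).
rng = np.random.default_rng(0)
mixing = rng.normal(size=(3, 12))
X_train = rng.normal(size=(500, 3)) @ mixing + 0.05 * rng.normal(size=(500, 12))

mean, W = fit_linear_autoencoder(X_train, k=3)
threshold = fit_threshold(reconstruction_error(X_train, mean, W))

x_normal = rng.normal(size=(1, 3)) @ mixing                 # lies on the "normal" subspace
x_slip = x_normal + rng.normal(scale=2.0, size=(1, 12))     # large off-subspace deviation
errors = reconstruction_error(np.vstack([x_normal, x_slip]), mean, W)
is_anomaly = errors > threshold
print(is_anomaly)
```

Only normal data is needed for training, which matches the setting described above where slip events are rare and hard to label.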

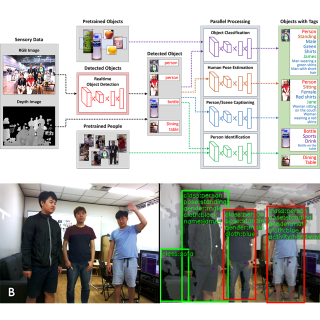

Visual perception framework for an intelligent mobile robot

Chung-Yeon Lee, Hyundo Lee, Injune Hwang, Byoung-Tak Zhang

International Conference on Ubiquitous Robots and Ambient Intelligence (UR), 2020

paper / video

A ROS-based visual perception framework that integrates various deep learning methods for object recognition, person identification, human pose estimation, scene captioning, and object-aware navigation.

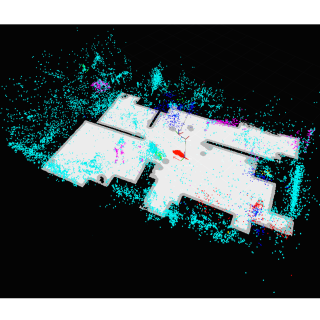

Spatial Perception by Object-Aware Visual Scene Representation

Chung-Yeon Lee, Hyundo Lee, Injune Hwang, Byoung-Tak Zhang

ICCV 2019 Workshop on Deep Learning for Visual SLAM, 2019

paper

A spatial perception framework for autonomous robots that augments traditional geometric scene representations with semantic, object-aware features. This improves map generation, reduces tracking failures, and enables more reliable place recognition, both in home environments and on large-scale indoor datasets such as ScanNet.

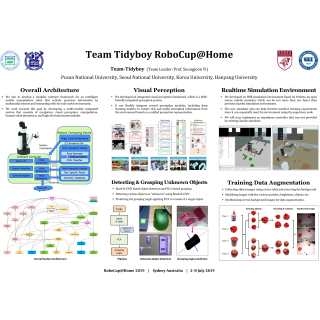

Tidyboy

Advanced Robotics, 2022

RoboCup 2021: Robot World Cup XXIV, 2022

YouTube videos

Team Tidyboy is a robotics challenge team that tackles home service tasks by integrating multiple AI models covering verbal and nonverbal human-robot interaction, 3D perception of known and unknown objects, and manipulation of diverse items through coordinated control of the robot's manipulator and mobile base.

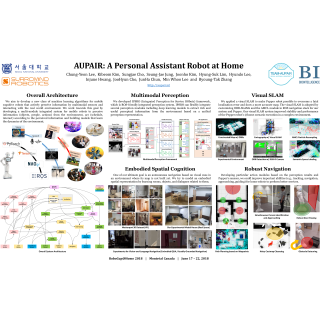

Aupair

AAAI Conference on Artificial Intelligence (AAAI), 2018

YouTube videos

Team Aupair aims to develop intelligent mobile cognitive robots using machine learning. We envision a new paradigm of service robots that use state-of-the-art deep learning methods to carry out difficult and complex real-world tasks. We propose a novel integrated perception system for service robots that provides an elastic parallel pipeline for integrating multimodal vision modules, including state-of-the-art deep learning models. On top of this system, we deploy higher-level modules such as socially-aware navigation, visual question answering, and schedule learning.

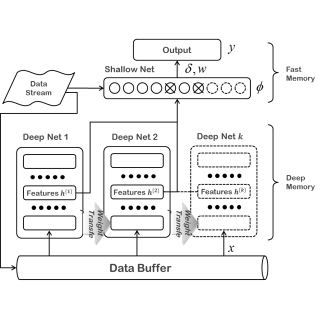

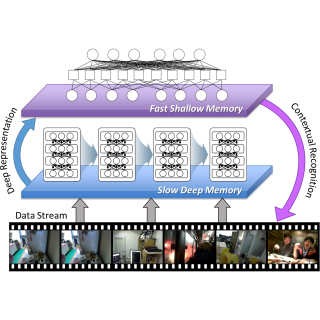

Dual-memory neural networks for modeling cognitive activities of humans via wearable sensors

Sang-Woo Lee, Chung-Yeon Lee, Dong-Hyun Kwak, Jung-Woo Ha, Jeonghee Kim, Byoung-Tak Zhang

Neural Networks, 2017

International Joint Conference on Artificial Intelligence (IJCAI), 2016

We propose a dual-memory deep learning architecture for lifelong learning of everyday human behaviors from non-stationary data streams. The architecture combines a slowly changing global memory with a fast-adapting local memory, and we demonstrate its effectiveness on real-world datasets, including image streams and lifelogs collected over extended periods.

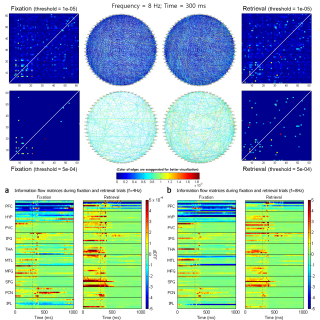

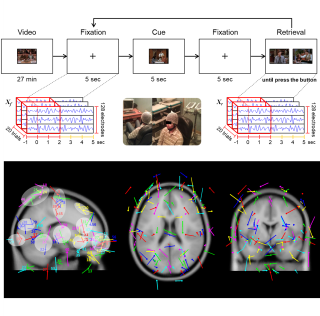

Effective EEG Connectivity Analysis of Episodic Memory Retrieval

Chung-Yeon Lee and Byoung-Tak Zhang

Annual Conference of the Cognitive Science Society (CogSci), 2014

paper

We investigate the information flow network of the human brain during episodic memory retrieval. Using source reconstruction techniques and effective connectivity methods, we estimated local oscillation amplitudes and asymmetric inter-areal synchronization from EEG recordings mapped onto individual cortical anatomy. The strength and spectro-anatomical patterns of these inter-areal interactions on sub-second time scales reveal that episodic memory retrieval involves increased information flow and densely interconnected networks among the prefrontal cortex, the medial temporal lobe, and subregions of the parietal cortex. Within this network, the superior frontal gyrus (SFG) acted as a hub, globally interconnected with broad brain regions.

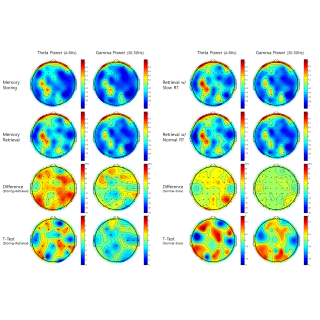

Neural correlates of episodic memory formation in audio-visual pairing tasks

Chung-Yeon Lee, Beom-Jin Lee, and Byoung-Tak Zhang

Annual Conference of the Cognitive Science Society (CogSci), 2012

paper

We present a memory experiment that uses audio-visual movies as naturalistic stimuli. EEG recorded during memory formation shows that theta-band oscillatory activity over the left parietal lobe and gamma-band activity over the temporal lobes are related to overall memory formation. During retrieval tasks, theta and gamma power over the frontal lobes and gamma power over the occipital lobes both increased.

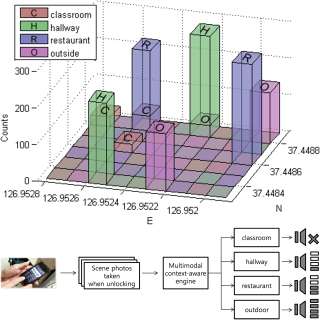

Place awareness learned by mobile vision-GPS sensor data

Chung-Yeon Lee, Jung-Woo Ha, Beom-Jin Lee, Woo-Sung Kang, and Byoung-Tak Zhang

NIPS 2012 Workshop on Machine Learning Approaches to Mobile Context Awareness, 2012

paper

Recognizes the type of place a person is in (e.g., classroom, hallway, restaurant, outdoor) from photographs taken with a smartphone camera combined with GPS information, using a modified SVM classifier that takes SIFT image features as input and uses GPS as weighting information.

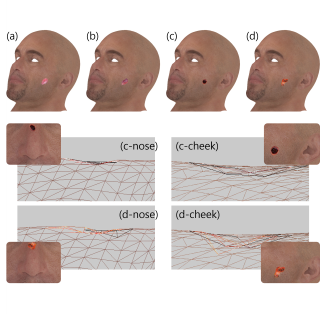

Multi-layer structural wound synthesis on 3D face

Chung-Yeon Lee, Sangyong Lee, and Seongah Chin

Journal of Computer Animation and Virtual Worlds, 2011

International Conference on Computer Animation and Social Agents (CASA), 2011

paper

We propose multi-layer structural wound synthesis on a 3D face. The approach first defines a facial tissue depth map that captures detail at various locations on the face. Each skin layer in a wound image is then determined by hue-based segmentation, and disparity parameters are used to realize 3D depth so that the wound model is volumetric. Finally, we validate the method with 3D wound simulation experiments.

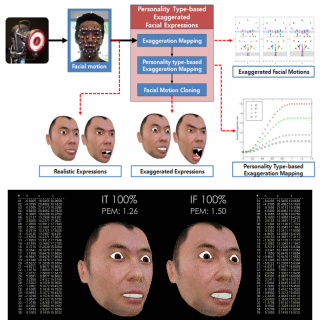

An automatic method for motion capture-based exaggeration of facial expressions with personality types

Seongah Chin, Chung-Yeon Lee, Jaedong Lee

Virtual Reality, 2011

paper

We propose an automatic method for exaggerating facial expressions from motion-captured data according to a given personality type. The exaggerated expressions are generated by an exaggeration mapping (EM) that transforms facial motions into exaggerated motions. Because individuals do not all share the same personality, the exaggeration must account for each individual's personality type. The Myers–Briggs Type Indicator, a popular method for classifying personality types, is used to define the personality-type-based EM. We experimentally validate the EM and the resulting facial expression simulations.

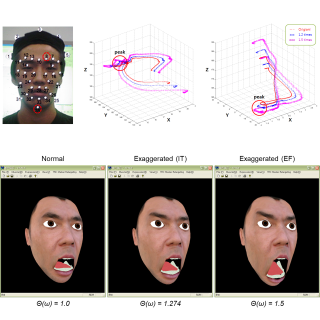

Personal style and Non-negative matrix factorization based exaggerative expressions of face

Seongah Chin, Chung-Yeon Lee, Jaedong Lee

International Conference on Computer Graphics & Virtual Reality (CGVR), 2009

paper

We propose a method to generate exaggerated facial expressions based on individual personal styles by applying Non-Negative Matrix Factorization (NMF) to decompose facial motion data, then enhancing expression intensity using a style-dependent exaggeration rate (SER). Experiments show that this approach effectively produces subtle, personalized exaggerations across various basic emotions such as happiness, sadness, and surprise.
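
The decompose-then-scale idea can be sketched in Python as follows. This is a minimal sketch, not the paper's method: it assumes nonnegative motion features (for example, marker displacement magnitudes), uses the standard Lee-Seung multiplicative updates for NMF, and treats the SER as a hypothetical per-basis scaling vector; the data, rank, and rate values are all illustrative.

```python
import numpy as np

def nmf(V, r, iters=500, eps=1e-9, seed=0):
    """Factor a nonnegative matrix V (m x n) as W @ H, W (m x r), H (r x n).

    Lee-Seung multiplicative updates for the squared Frobenius loss; positive
    initialization keeps both factors nonnegative throughout.
    """
    rng = np.random.default_rng(seed)
    m, n = V.shape
    W = rng.random((m, r)) + eps
    H = rng.random((r, n)) + eps
    for _ in range(iters):
        H *= (W.T @ V) / (W.T @ W @ H + eps)
        W *= (V @ H.T) / (W @ H @ H.T + eps)
    return W, H

def exaggerate(W, H, ser):
    """Scale each basis activation by a (hypothetical) style-dependent rate."""
    return W @ (ser[:, None] * H)

# Synthetic stand-in: rows are nonnegative motion features, columns are frames.
rng = np.random.default_rng(1)
V = rng.random((30, 3)) @ rng.random((3, 40))

W, H = nmf(V, r=3)
ser = np.array([1.5, 1.0, 1.2])   # hypothetical per-basis exaggeration rates
V_exag = exaggerate(W, H, ser)

rel_err = np.linalg.norm(V - W @ H) / np.linalg.norm(V)
print(f"relative reconstruction error: {rel_err:.4f}")
print("mean intensity before/after:", V.mean().round(3), V_exag.mean().round(3))
```

Scaling the activations rather than the raw data keeps the result nonnegative and lets different bases (and hence different parts of an expression) be emphasized by different amounts.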

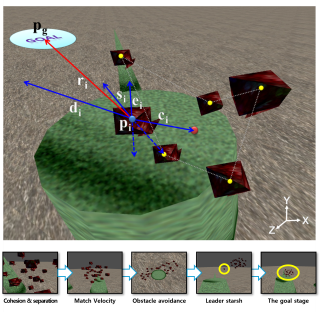

Leadership and self-propelled behavior based autonomous virtual fish motion

Seongah Chin, Chung-Yeon Lee, Jaedong Lee

International Conference on Convergence and Hybrid Information Technology (ICCIT), 2008

paper

We present a method for simulating the shoal motion of autonomous virtual fish under effective leadership. The shoal follows a leader, and its motion is computed from five steering behavior vectors: cohesion, separation, velocity, escape, and goal. Experiments show that the leader strongly influences the simulated shoal and guides the group accurately.
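
Combining the five steering vectors can be illustrated with a boids-style Python sketch. Everything specific here is an assumption rather than the paper's formulation: the weights, the neighbor and panic radii, reading the "velocity" behavior as velocity matching, the seek-style goal vector, and the rule that followers treat the leader's position as their goal.

```python
import numpy as np

# Hypothetical weights for the five steering behaviors; not taken from the paper.
WEIGHTS = {"cohesion": 1.0, "separation": 1.5, "velocity": 0.5, "escape": 3.0, "goal": 1.0}

def _unit(v):
    n = np.linalg.norm(v)
    return v / n if n > 1e-9 else np.zeros_like(v)

def steering(pos, vel, i, target, predator, max_speed, radius=2.0, panic=3.0):
    """Weighted sum of five steering vectors for fish i (boids-style sketch)."""
    others = np.arange(len(pos)) != i
    offsets = pos[others] - pos[i]
    dists = np.linalg.norm(offsets, axis=1)
    near = dists < radius
    zero = np.zeros(pos.shape[1])

    # Cohesion: steer toward the centre of nearby fish.
    cohesion = offsets[near].mean(axis=0) if near.any() else zero
    # Separation: push away from nearby fish, harder the closer they are.
    separation = -(offsets[near] / (dists[near][:, None] ** 2 + 1e-9)).sum(axis=0)
    # Velocity matching: align with the mean velocity of nearby fish.
    velocity = vel[others][near].mean(axis=0) - vel[i] if near.any() else zero
    # Escape: flee the predator only when it is inside the panic radius.
    away = pos[i] - predator
    escape = _unit(away) if np.linalg.norm(away) < panic else zero
    # Goal: seek the target at full speed (desired velocity minus current velocity).
    goal = max_speed * _unit(target - pos[i]) - vel[i]

    return (WEIGHTS["cohesion"] * cohesion + WEIGHTS["separation"] * separation
            + WEIGHTS["velocity"] * velocity + WEIGHTS["escape"] * escape
            + WEIGHTS["goal"] * goal)

def step(pos, vel, leader_goal, predator, dt=0.1, max_speed=2.0):
    """Fish 0 leads toward leader_goal; every other fish uses the leader as its goal."""
    new_vel = vel.copy()
    for i in range(len(pos)):
        target = leader_goal if i == 0 else pos[0]
        new_vel[i] += dt * steering(pos, vel, i, target, predator, max_speed)
        speed = np.linalg.norm(new_vel[i])
        if speed > max_speed:
            new_vel[i] *= max_speed / speed  # clamp to the maximum swimming speed
    return pos + dt * new_vel, new_vel

# Usage: a leader at the origin with four followers behind it; predator far away.
pos = np.array([[0.0, 0.0], [-1.0, 0.5], [-1.0, -0.5], [-2.0, 0.5], [-2.0, -0.5]])
vel = np.zeros_like(pos)
goal, predator = np.array([20.0, 0.0]), np.array([-50.0, -50.0])
start = np.linalg.norm(pos.mean(axis=0) - goal)
for _ in range(100):
    pos, vel = step(pos, vel, goal, predator)
print("shoal centroid distance to goal:", start, "->", np.linalg.norm(pos.mean(axis=0) - goal))
```

Giving only the leader the true goal, while followers seek the leader, is one simple way to make leadership the mechanism that guides the group.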

*Last updated: September 2025*